Have you ever wondered how search engines magically find and display the most relevant web pages when you type in a query? The secret lies in two crucial processes:

crawling and indexing. These are the foundation of

Search Engine Optimization (SEO) and play a vital role in determining how visible your website is in search results.

We’ve all experienced the frustration of creating great content only to have it lost in the vast sea of the internet. Without proper crawling and indexing, even the most valuable information can remain hidden from potential readers. But fear not! Understanding these processes can help you unlock the full potential of your website and skyrocket its visibility.

In this blog post, we’ll dive deep into the world of crawling and indexing in SEO. We’ll explore how search engines work, the fundamentals of crawling, and the intricacies of indexing. Plus, we’ll share practical tips on how to guide search engines through your site effectively. By the end, you’ll have a solid grasp of these concepts and be ready to optimize your website for better search engine performance. Let’s embark on this

SEO journey together!

How do Search Engines Work?

What is Search Engine Crawling?

What is Search Engine Crawling?

Search engine crawling is the process by which search engines discover and collect information about web pages. We can think of it as the first step in the search engine’s journey to understand and organize the vast amount of information available on the internet.

When we talk about crawling, we’re referring to automated programs, often called “spiders” or “bots,” that systematically browse the web. These bots follow links from one page to another, much like we might click through various pages while browsing the internet.

Here’s a simplified breakdown of the crawling process:

- Starting point: Search engines begin with a list of known web addresses

- Visiting pages: Bots access these pages and read their content

- Following links: They discover new pages by following links

- Updating database: Information about these pages is stored for later use

| Crawling Step |

Purpose |

| Discovery |

Find new and updated web pages |

| Retrieval |

Fetch page content and metadata |

| Processing |

Analyze page structure and links |

| Storage |

Save relevant information for indexing |

What’s That Word Mean?

When we dive into the world of search engines, we encounter various technical terms. Let’s clarify some key vocabulary related to crawling:

- Spider/Bot: The automated program that crawls websites

- Crawl budget: The number of pages a search engine will crawl on your site in a given time period

- Robots.txt: A file that tells search engines which pages or sections of your site to crawl or not crawl

- Sitemap: A file that lists all the important pages on your website to help search engines crawl more efficiently

- Crawl rate: The speed at which search engines crawl your site

Understanding these terms is crucial for optimizing our websites for search engines. By managing how search engines crawl our sites, we can improve our visibility in search results and ensure that our most important content is discovered and indexed.

The Fundamentals of Crawling for SEO – Whiteboard Friday

What is a Search Engine Index?

A search engine index is like a vast digital library that stores and organizes

web pages. When we talk about indexing, we’re referring to the process where search engines add web pages to their database. This index is crucial for quickly delivering relevant search results to users.

Here’s a breakdown of how indexing works:

- Crawling: Search engines discover web pages

- Processing: They analyze the content and metadata

- Storing: Relevant information is added to the index

- Retrieval: The index is used to serve search results

| Index Component |

Description |

| URLs |

Unique addresses of web pages |

| Content |

Text, images, and other media |

| Metadata |

Title tags, descriptions, and schema |

| Link data |

Internal and external links |

Search Engine Ranking

Once pages are indexed, search engines use complex algorithms to determine their ranking in search results. We consider various factors when optimizing for better rankings:

- Relevance to the search query

- Content quality and depth

- User experience signals

- Page load speed

- Mobile-friendliness

- Backlink profile

In SEO, Not All Search Engines are Equal

While

Google dominates the search market, it’s important to remember that other search engines exist and may be relevant depending on your target audience. Here’s a comparison of major search engines:

| Search Engine |

Market Share |

Key Features |

| Google |

~92% |

Advanced AI, vast index |

| Bing |

~3% |

Integration with Microsoft products |

| Yahoo |

~1.5% |

News and email integration |

| DuckDuckGo |

<1% |

Privacy-focused |

We focus primarily on Google due to its market dominance, but it’s wise to keep an eye on other search engines’ best practices as well. Now that we understand the basics of crawling and indexing, let’s explore how search engines find your pages in more detail.

Crawling: Can Search Engines Find Your Pages?

Crawling Process

We begin our exploration of search engine crawling by understanding how these digital spiders navigate the web. Search engines use automated programs called “crawlers” or “spiders” to discover and analyze web pages. These crawlers follow links from one page to another, building a map of the internet as they go.

Factors Affecting Crawlability

Several factors influence how easily search engines can find and crawl your pages:

- Site Structure

- Internal Linking

- XML Sitemaps

- Robots.txt File

- Page Load Speed

Let’s take a closer look at each of these factors in the following table:

| Factor |

Impact on Crawlability |

| Site Structure |

A clear, logical hierarchy helps crawlers navigate your site efficiently |

| Internal Linking |

Proper internal linking ensures all pages are discoverable |

| XML Sitemaps |

Provides a roadmap of your site’s content for crawlers |

| Robots.txt |

Guides crawlers on which pages to access or avoid |

| Page Load Speed |

Faster-loading pages are crawled more frequently |

Common Crawling Issues

To ensure search engines can find your pages, we need to address common crawling issues:

- Broken links

- Duplicate content

- Orphaned pages

- Excessive redirects

- Blocked resources

By addressing these issues, we can significantly improve our site’s crawlability. Now that we understand how search engines find our pages, let’s explore how to guide their crawling process effectively.

Tell Search Engines How to Crawl Your Site

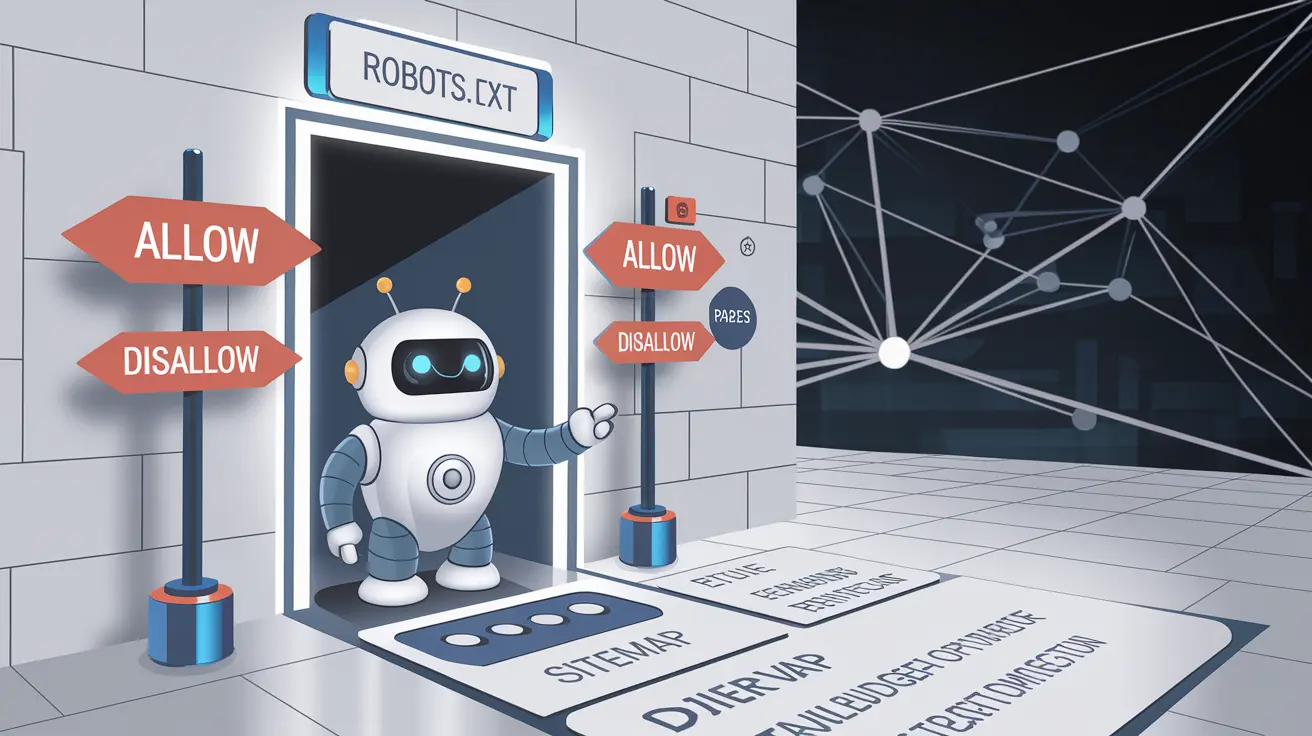

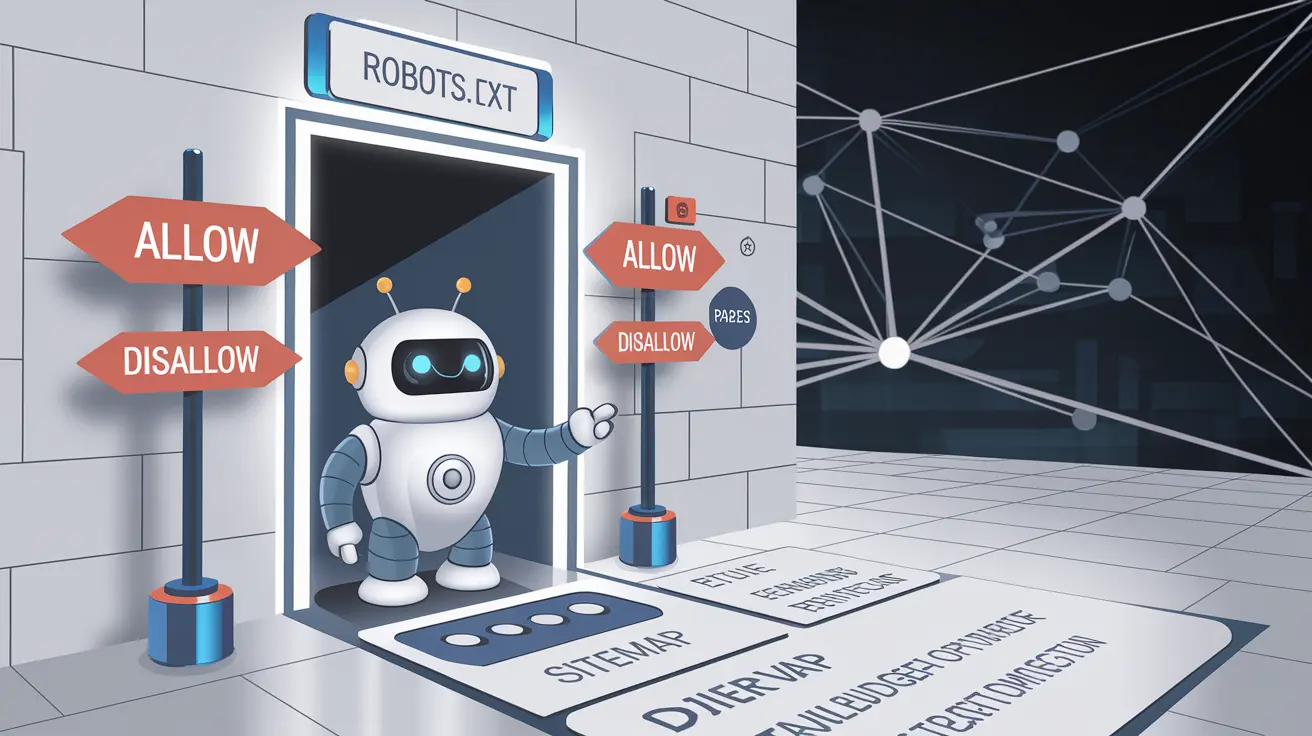

Robots.txt

We begin our journey into controlling search engine crawling with the robots.txt file. This crucial file acts as a gatekeeper, instructing search engines on which parts of your site they can access. By properly

configuring your robots.txt, we can guide crawlers efficiently through our site’s content.

How Googlebot Treats Robots.txt Files

Googlebot, Google’s web crawler, pays close attention to robots.txt directives. We need to understand its behavior to optimize our site’s crawlability. Here’s a quick overview of how Googlebot interprets common robots.txt instructions:

| Directive |

Googlebot’s Action |

| Allow |

Crawls the specified pages |

| Disallow |

Skips the specified pages |

| Noindex |

Ignores (no longer supported) |

| Sitemap |

Discovers XML sitemaps |

Optimize For Crawl Budget!

Our crawl budget is precious, and we must use it wisely. By prioritizing important pages and reducing unnecessary crawls, we can ensure that search engines focus on our most valuable content. Some strategies include:

- Removing low-quality or duplicate pages

- Consolidating similar content

- Improving site speed and performance

Defining URL Parameters in GSC

In

Google Search Console (GSC), we have the power to guide Googlebot’s interpretation of URL parameters. This helps prevent crawling of duplicate content and conserves our crawl budget. We should identify parameters that don’t change page content and instruct Google to ignore them.

Can Crawlers Find all Your Important Content?

Ensuring crawler accessibility is crucial. We need to check that:

- Our site’s architecture is logical and easy to navigate

- Internal linking is robust and purposeful

- There are no orphaned pages or dead-end links

By addressing these points, we make it easier for search engines to discover and index our valuable content.

How do Search Engines Interpret and Store Your Pages?

Can I See How a Googlebot Crawler Sees my Pages?

We can indeed get a glimpse of how Googlebot views our pages, which is crucial for understanding indexing.

Google Search Console offers a valuable tool called “URL Inspection” that allows us to see our pages through Googlebot’s eyes. This tool provides insights into how our content is interpreted and stored in Google’s index.

Here’s a breakdown of what we can learn from the URL Inspection tool:

- Crawl status

- Page resources

- JavaScript execution

- Rendered HTML

- Mobile-friendliness

| Feature |

Description |

| Live Test |

Shows real-time crawling and rendering |

| Indexed Version |

Displays the last indexed version of the page |

| Mobile Usability |

Highlights mobile-specific issues |

| Rich Results |

Indicates presence of structured data |

By utilizing this tool, we can identify potential indexing issues and ensure our pages are being interpreted correctly by search engines.

Are Pages Ever Removed From The Index?

Yes, pages can be removed from search engine indexes for various reasons. Understanding these reasons helps us maintain a healthy, indexed presence on search engines.

Common reasons for page removal include:

- Manual actions by search engines

- Violations of webmaster guidelines

- Prolonged inaccessibility of the page

- Implementation of noindex directives

- Removal requests by website owners

It’s important to note that removal from the index doesn’t always mean permanent exclusion. In many cases, we can take corrective actions to have our pages re-indexed. Regular monitoring of our site’s indexing status through tools like

Google Search Console helps us stay on top of any indexing issues and address them promptly.

Now that we’ve covered how search engines interpret and store our pages, let’s explore how they rank URLs in search results.

Tell Search Engines How to Index Your Site

Robots Meta Directives

We can guide search engines on how to index our site using robots meta directives. These are essential tools in our SEO arsenal, allowing us to communicate directly with search engine crawlers. By implementing these directives, we can control which pages should be indexed and which should be kept out of search results.

Here’s a quick reference table for common robots meta directives:

| Directive |

Purpose |

| index |

Allow indexing of the page |

| noindex |

Prevent indexing of the page |

| follow |

Allow following links on the page |

| nofollow |

Prevent following links on the page |

Meta Directives Affect Indexing, not Crawling

It’s crucial to understand that meta directives primarily influence indexing, not crawling. This means search engines may still crawl our pages even if we’ve used a noindex directive, but they won’t include those pages in their search results. Here’s why this distinction matters:

- Resource allocation: Crawlers still use resources to access noindex pages

- Link equity: Noindex pages can still pass link equity to other pages

- Crawl budget: Excessive noindex pages may impact our site’s crawl budget

WordPress Tip:

For WordPress users, managing indexing is straightforward. We can utilize plugins or modify our theme to implement robots meta directives. Here are some quick tips:

- Use Yoast SEO or All in One SEO Pack plugins for easy management

- Add custom fields to control indexing on a per-page basis

- Modify the header.php file to include site-wide directives

By mastering these indexing techniques, we can ensure that search engines understand and respect our preferences for how our site should appear in search results.

How do Search Engines Rank URLs?

What do Search Engines Want?

What do Search Engines Want?

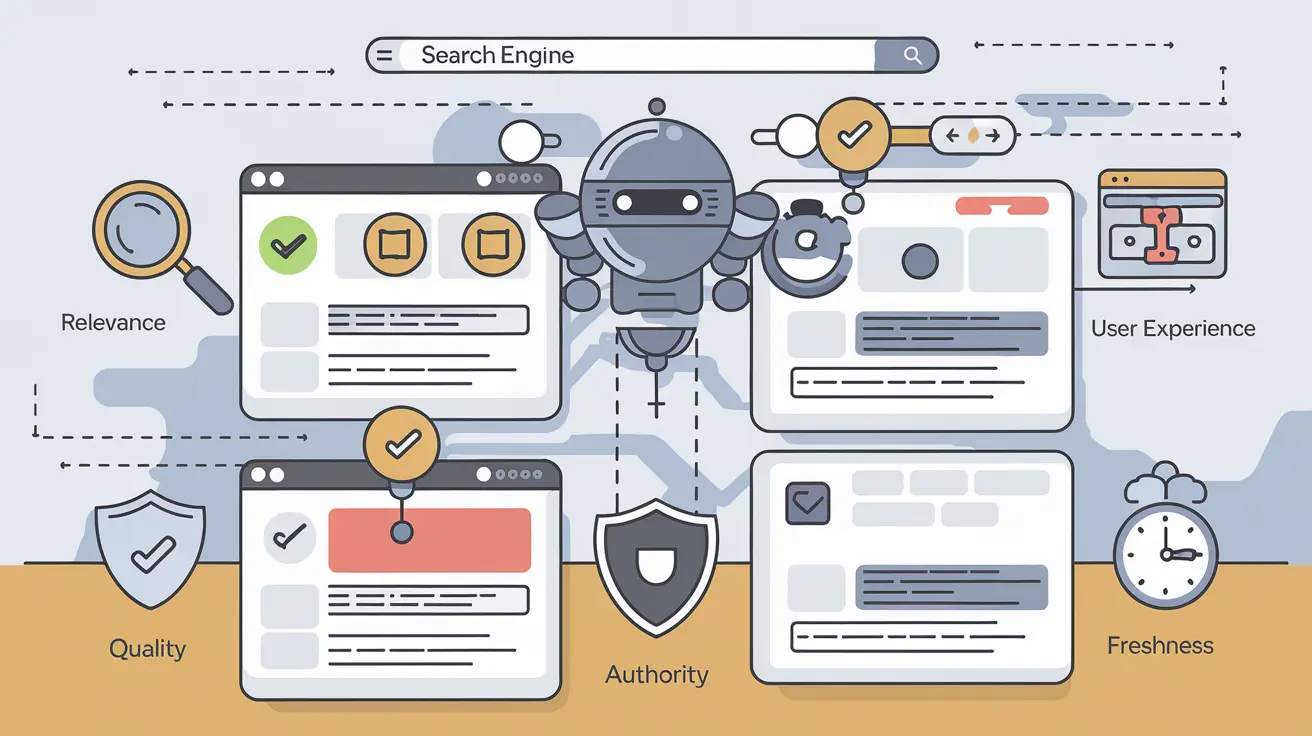

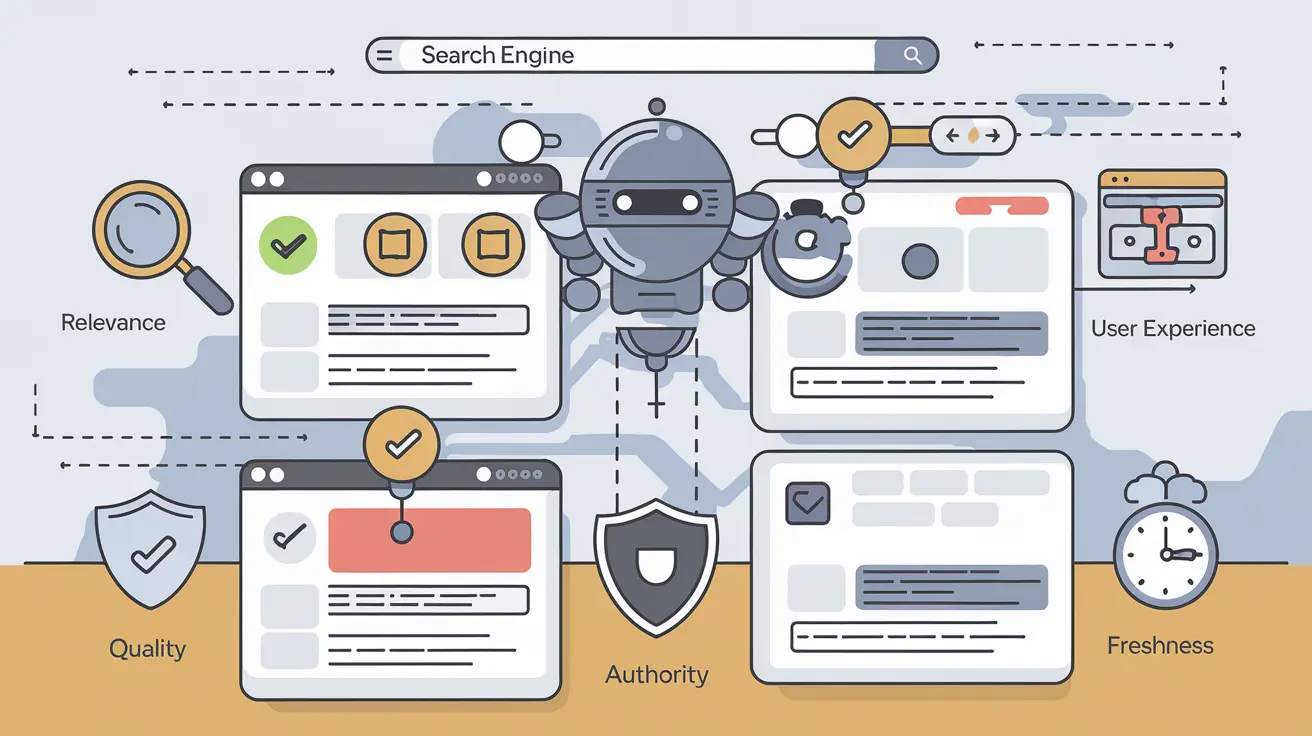

Search engines aim to provide the most relevant and high-quality results to users’ queries. Their primary goal is to satisfy user intent by delivering accurate, helpful, and authoritative information. We can break down their objectives into several key factors:

- Relevance

- Quality

- User Experience

- Authority

- Freshness

Let’s explore these factors in more detail:

| Factor |

Description |

| Relevance |

Content that directly addresses the user’s query |

| Quality |

Well-written, informative, and original content |

| User Experience |

Fast-loading, mobile-friendly, and easy-to-navigate websites |

| Authority |

Trusted sources with backlinks from reputable sites |

| Freshness |

Up-to-date information, especially for time-sensitive topics |

The Role Links Play in SEO

Links continue to be a crucial factor in how search engines rank URLs. We can categorize links into two main types:

- Internal links

- External links (backlinks)

Internal links help search engines understand the structure of our website and distribute link equity. Backlinks, on the other hand, serve as “votes of confidence” from other websites. The quality and relevance of these backlinks significantly impact our search engine rankings.

The Role Content Plays in SEO

Content is king in the world of SEO. High-quality, relevant content helps us:

- Address user intent

- Establish authority in our niche

- Attract natural backlinks

- Improve engagement metrics

We should focus on creating comprehensive, well-researched content that provides value to our audience. This approach not only satisfies search engines but also builds trust with our readers.

Localized Search

Relevance

When it comes to localized search, relevance is a crucial factor that search engines consider. We need to ensure that our business information aligns closely with what users are searching for. This means optimizing our content, products, and services to match local search intent.

To improve relevance:

- Use location-specific keywords in titles, meta descriptions, and content

- Create location-specific pages for each service area

- Include local landmarks, events, or cultural references in your content

Distance

Distance plays a significant role in local search rankings. Search engines aim to provide users with the most convenient options based on their location. We must ensure that our business address is accurately listed and up-to-date across all platforms.

| Factor |

Impact on Local Search |

| Proximity to searcher |

High |

| Service area coverage |

Medium |

| Multiple locations |

Moderate |

Prominence

Prominence refers to how well-known and respected our business is in the local community and online. We can boost our prominence by:

- Gathering positive reviews from satisfied customers

- Maintaining consistent NAP (Name, Address, Phone) information across directories

- Building high-quality local backlinks

- Engaging in local community events and sponsorships

Local Engagement

Local engagement is an increasingly important factor in localized search. We can improve our local engagement by:

- Responding promptly to customer reviews and questions

- Sharing local news and events on our social media platforms

- Participating in local online forums and discussions

- Hosting or sponsoring community events

By focusing on these aspects of localized search, we can significantly improve our visibility in local search results and attract more nearby customers.

Crawling and indexing are fundamental processes that enable search engines to discover, understand, and organize web content. We’ve explored how search engines work, delving into the intricacies of crawling and indexing, and their crucial role in SEO. From understanding how search engines find and interpret your pages to learning how to guide their crawling and indexing behavior, we’ve covered essential strategies to improve your website’s visibility.

As we move forward in the ever-evolving world of SEO, it’s vital to remember that optimizing for crawling and indexing is just the beginning. By implementing the techniques discussed, such as proper site structure, effective use of robots.txt, and strategic XML sitemaps, we can ensure our content is easily discoverable and correctly interpreted by search engines. This foundation sets the stage for improved rankings and increased organic traffic, ultimately helping us achieve our digital marketing goals.

What is Search Engine Crawling?

What is Search Engine Crawling?

What do Search Engines Want?

What do Search Engines Want?